Published on October 14, 2025 · 8 min read

Exploring Hugging Face: The AI Platform Democratizing Innovation

A Platform That Changed How We Build AI: Hugging Face is transforming AI from a niche for experts into a playground where anyone can build, experiment, and innovate.

– By Abinesh Kumar, AI/ML Developer, Yuvabe Studios

Answer Summary:

Hugging Face is a community-driven AI platform that makes machine learning accessible through open-source models, datasets, and tools. It enables beginners, developers, and businesses to build, fine-tune, and deploy AI applications quickly without heavy infrastructure.

When we think of AI innovation, a handful of names come to mind: OpenAI, Google, Meta. But in the open-source AI community, one platform has quietly become the backbone for thousands of AI projects across the world: Hugging Face. What started as a chatbot company has now grown into an ecosystem that fuels everything from text summarization apps in startups to computer vision systems in global enterprises.

For us at Yuvabe Studios, Hugging Face represents something more: a gateway to democratized AI. It’s not just for PhDs or data scientists — it’s for designers, marketers, researchers, and anyone curious enough to experiment.

What Is Hugging Face and Why Does It Matter?

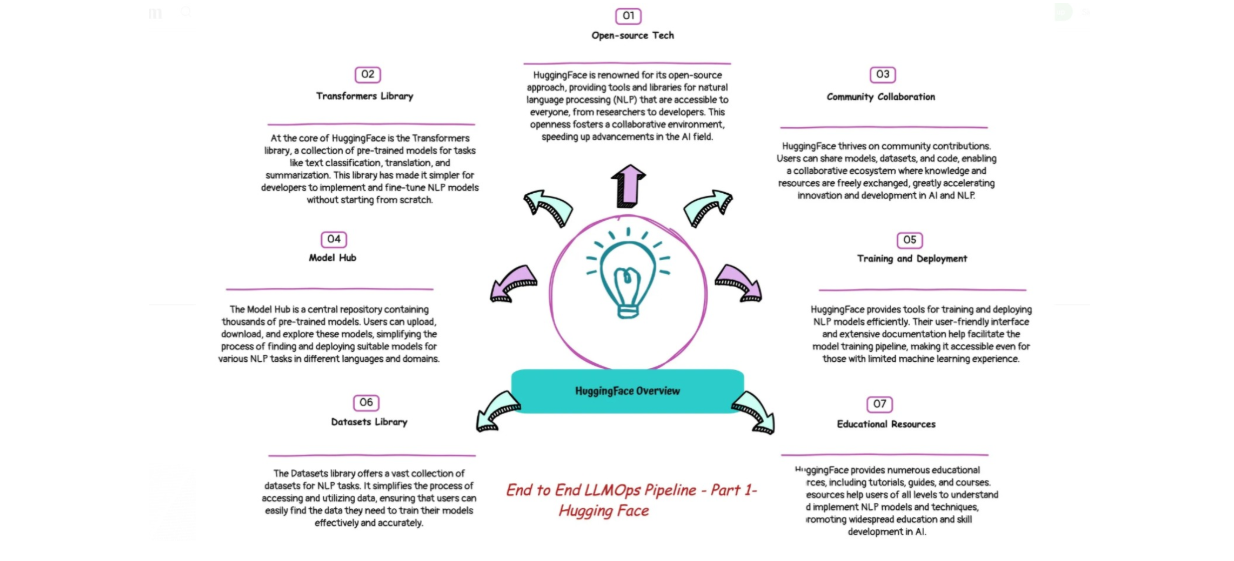

At its core, Hugging Face is a community-driven AI platform offering tools, models, and datasets across multiple domains:

- Computer Vision: Pre-trained models for image classification, object detection, and more.

- Audio Processing: Speech-to-text, audio classification, and transcription tools.

- Text-to-Image Generation: Tools like Stable Diffusion for converting words into visuals.

- Multimodal AI: Models like CLIP that link text and images, plus others that also work with audio.

But Hugging Face is not just a collection of tools; it’s an ecosystem where innovation happens collaboratively.

How Hugging Face Democratizes AI Development

The AI space is crowded, so why does Hugging Face matter so much?

- User-Friendly: Intuitive APIs mean you can run advanced models with just a few lines of code.

- Community-Driven: Thousands of developers worldwide contribute models, datasets, and improvements.

- Versatile: Whether you're building a sentiment analysis chatbot or deploying a text-to-image art generator, Hugging Face has you covered.

- Production-Ready: With Hugging Face Spaces and model hosting, it bridges the gap between experimentation and deployment.

Think of Hugging Face as the “GitHub of AI”: a hub where ideas become shared, reusable, and scalable solutions.

Getting Started with Hugging Face Pipelines

If you’re new to AI, Hugging Face is an ideal starting point. We’ve seen interns at Yuvabe, with no prior coding experience, build working sentiment analysis apps in a single day using Hugging Face pipelines. Here’s a quick guide to using Hugging Face to perform sentiment analysis with a pre-trained model:

Step 1: Install the Transformers Library

pip install transformers

Step 2: Load a Pre-trained Model

Hugging Face makes it incredibly easy to load and use models. Here’s an example using the sentiment-analysis pipeline:

from transformers import pipeline

# Load the sentiment-analysis pipeline

classifier = pipeline("sentiment-analysis")

# Perform sentiment analysis

result = classifier("I love using Hugging Face!")

print(result)

# Output: [{'label': 'POSITIVE', 'score': 0.9998}]

Step 3: Experiment with Other Tasks

You can easily switch to other tasks like summarization or question answering by changing the pipeline type:

from transformers import pipeline

# Summarization example

summarizer = pipeline("summarization")

text = "Hugging Face provides an amazing library for AI tasks. It is simple to use and highly versatile."

summary = summarizer(text, max_length=30, min_length=10, do_sample=False)

print(summary)

Step 4: Keep Exploring

That’s all it takes — no GPU clusters, no weeks of training. Within minutes, beginners can test pre-trained AI models. Hugging Face also supports tasks like question answering, translation, and text generation, making it an educational goldmine for newcomers in AI.
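Pipelines return plain Python lists of dictionaries, so ordinary code can post-process them. The sketch below is a hypothetical helper: the name `summarize_sentiment` and the 0.8 threshold are illustrative choices of ours, not part of the Transformers library. It turns results shaped like the Step 2 output into readable lines and flags low-confidence predictions. The sample data is hard-coded so the snippet runs without downloading a model.

```python
from typing import Dict, List

# Sample data shaped like the sentiment-analysis output shown in Step 2.
# The second entry is made up for illustration.
sample_results = [
    {"label": "POSITIVE", "score": 0.9998},
    {"label": "NEGATIVE", "score": 0.62},
]


def summarize_sentiment(results: List[Dict], threshold: float = 0.8) -> List[str]:
    """Return one readable line per result, marking scores below `threshold`."""
    lines = []
    for item in results:
        label = item["label"]
        score = item["score"]
        # Flag predictions the model is less sure about.
        note = "" if score >= threshold else " (low confidence, review manually)"
        lines.append(f"{label} {score:.2%}{note}")
    return lines


for line in summarize_sentiment(sample_results):
    print(line)
```

In a real script, you could pass the pipeline's return value straight in, for example `summarize_sentiment(classifier(["first text", "second text"]))`.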

The Layers of the Hugging Face Ecosystem

Hugging Face is more than a library; it’s a layered ecosystem:

- Transformers Library: Powering BERT, GPT, RoBERTa, T5, CLIP, and more, with the companion Diffusers library covering image generators such as Stable Diffusion.

- Datasets Library: Tools for loading, processing, and sharing datasets, with thousands of community-contributed datasets available on the Hub.

- Hugging Face Hub: A central repository for sharing and managing AI models.

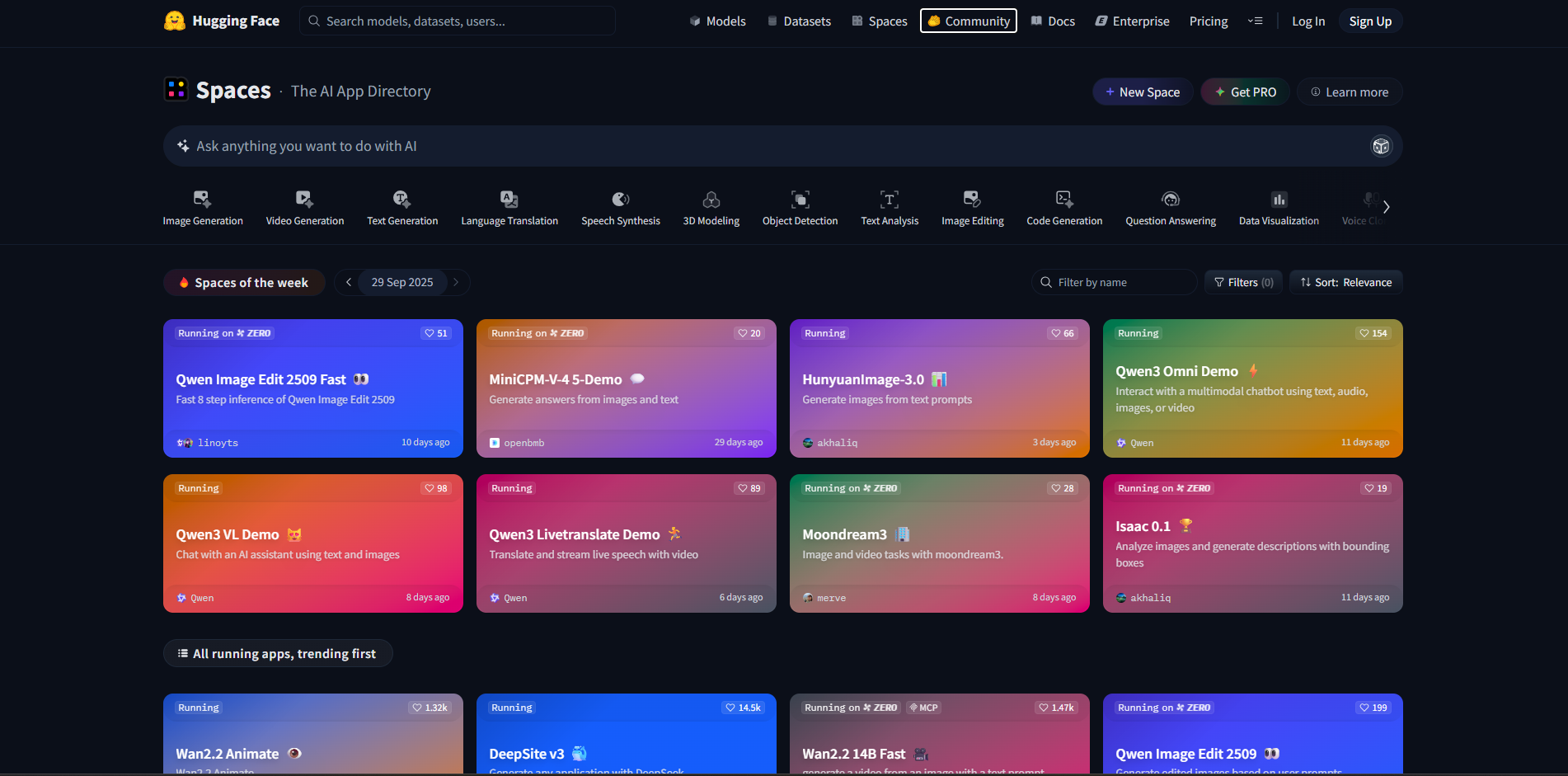

- Spaces: A no-code/low-code platform to deploy models as interactive web apps.

Imagine uploading your custom AI model to Spaces and letting anyone, anywhere, test it with a click. That’s the kind of accessibility Hugging Face enables.
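To make the idea of a "central repository" concrete: every file in a Hub repository is reachable at a predictable URL. The sketch below builds such a URL by hand. The helper name `hub_file_url` is our own; the official `huggingface_hub` client provides `hf_hub_url` and `hf_hub_download` for real use, so treat this as an illustration of the addressing scheme rather than a replacement for the client.

```python
from urllib.parse import quote

HUB_ENDPOINT = "https://huggingface.co"


def hub_file_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build the download URL for one file in a model repository on the Hub."""
    # The revision (branch, tag, or commit) is fully escaped so that names
    # containing slashes stay within a single URL path segment.
    safe_revision = quote(revision, safe="")
    return f"{HUB_ENDPOINT}/{repo_id}/resolve/{safe_revision}/{quote(filename)}"


print(hub_file_url("bert-base-uncased", "config.json"))
```

Because the revision is part of the URL, the same repository can serve several versions of a model side by side, much like branches in a Git repository.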

Deploying AI Models with Hugging Face Spaces

Traditionally, deploying AI meant wrestling with servers, Docker images, and endless configurations. Hugging Face Spaces changes that.

- Create an account at huggingface.co

- Start a new Space and choose your framework (Streamlit, Gradio, or Static)

- Upload your code and dependencies. Spaces handles the build.

- Share the link. Your model is now a live web app.

We've used Spaces to quickly demo prototypes for clients, turning abstract concepts into clickable, testable tools in hours instead of weeks.
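As a rough sketch of what "upload your code and dependencies" can look like for a Gradio Space, the script below writes a minimal `app.py` and `requirements.txt` into a local folder. The folder name `sentiment-demo` and the helper `scaffold_space` are hypothetical, and the sketch assumes the Gradio Space SDK supplies `gradio` itself, so only the model libraries are listed as dependencies. Nothing here contacts Hugging Face; it only prepares files you could then upload.

```python
from pathlib import Path
import tempfile

# Contents of a minimal Gradio app wrapping the sentiment-analysis pipeline.
APP_PY = '''\
import gradio as gr
from transformers import pipeline

# Load the model once when the Space starts.
classifier = pipeline("sentiment-analysis")

def predict(text):
    result = classifier(text)[0]
    return f"{result['label']} ({result['score']:.2f})"

demo = gr.Interface(fn=predict, inputs="text", outputs="text")
demo.launch()
'''

# Assumed dependencies; gradio is expected to come from the Space SDK.
REQUIREMENTS_TXT = "transformers\ntorch\n"


def scaffold_space(target_dir: Path) -> list:
    """Write app.py and requirements.txt into target_dir; return the file names."""
    target_dir.mkdir(parents=True, exist_ok=True)
    (target_dir / "app.py").write_text(APP_PY, encoding="utf-8")
    (target_dir / "requirements.txt").write_text(REQUIREMENTS_TXT, encoding="utf-8")
    return sorted(p.name for p in target_dir.iterdir())


space_dir = Path(tempfile.mkdtemp()) / "sentiment-demo"
created = scaffold_space(space_dir)
print(created)
```

Keeping the model load at module level, outside `predict`, means the model is loaded once at startup rather than on every request.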

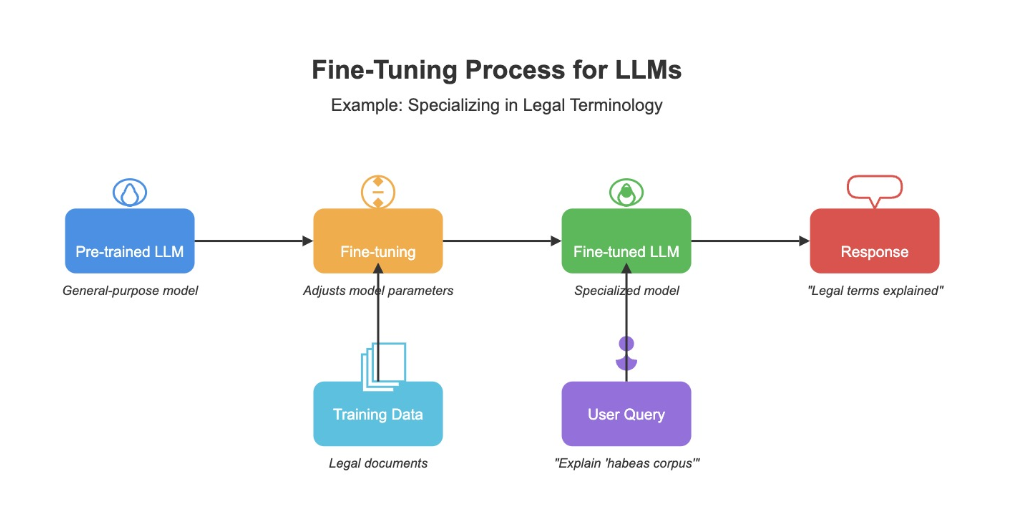

Fine-Tuning Models for Real-World Use Cases

Pre-trained models are powerful, but fine-tuning them on your own data unlocks real business value. Hugging Face’s Trainer API makes this process far less intimidating:

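from transformers import Trainer, TrainingArguments

# Assumes `model`, `train_dataset`, and `eval_dataset` are already defined,
# for example a sequence-classification model and tokenized datasets.

# Define training arguments
training_args = TrainingArguments(
    output_dir="./results",          # where checkpoints are written
    num_train_epochs=3,
    per_device_train_batch_size=16,
    eval_strategy="epoch",           # named evaluation_strategy in older transformers releases
    save_steps=10_000,               # save a checkpoint every 10,000 steps
    save_total_limit=2,              # keep only the two most recent checkpoints
)

# Initialize Trainer
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
)

# Train the model
trainer.train()

This capability is where enterprises see real ROI: custom models tailored to customer service data, product reviews, or domain-specific text.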
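To get a feel for what the training arguments above imply, here is a small back-of-the-envelope calculation. It is a sketch under stated assumptions: a single device, no gradient accumulation, and step-based checkpointing. The dataset sizes are hypothetical, and `training_schedule` is our own helper, not part of the Trainer API.

```python
import math


def training_schedule(num_examples, batch_size=16, epochs=3, save_steps=10_000):
    """Estimate optimizer steps and step-based checkpoints for a training run."""
    # One optimizer step per batch; a final partial batch still counts as a step.
    steps_per_epoch = math.ceil(num_examples / batch_size)
    total_steps = steps_per_epoch * epochs
    # A checkpoint is written each time the step counter reaches a multiple of save_steps.
    step_checkpoints = total_steps // save_steps
    return {
        "steps_per_epoch": steps_per_epoch,
        "total_steps": total_steps,
        "step_checkpoints": step_checkpoints,
    }


print(training_schedule(50_000))
print(training_schedule(1_000_000))
```

With 50,000 examples the run finishes in 9,375 steps, so a `save_steps` of 10,000 would never trigger a step-based checkpoint. On smaller datasets, a lower `save_steps` value may be a better fit.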

The Future of Open and Collaborative AI

Local AI isn’t just about where models run—it’s about who holds the keys to intelligence. When you run an LLM on your own device, you control the data, the costs, and the speed. It’s AI that bends to your context, not the other way around.

We’ve seen this story before. Computing moved from mainframes to desktops. Music moved from CDs to MP3s. Photography moved from film rolls to smartphones. Each shift brought technology closer to people.

AI is now following the same arc. And just like those earlier revolutions, the winners will be the ones who learn, experiment, and adopt early.

At Yuvabe Studios, we believe this is the next chapter in making technology truly human—intelligence that’s not just powerful, but also personal, private, and always within reach.

Making AI Accessible, Practical, and Powerful

Hugging Face has done for AI what WordPress did for websites — taken something complex and made it accessible, collaborative, and scalable.

For beginners, it’s a safe space to learn, play, and experiment.

For businesses, it’s a cost-effective way to leverage pre-trained models through libraries such as Hugging Face Transformers and fine-tune them for specific needs.

For the global AI community, it’s a platform that accelerates open-source innovation.

At Yuvabe Studios, we see Hugging Face not just as a tool, but as a partner in innovation. It helps us bring AI closer to design, marketing, and research — making it relevant and practical for our clients.

Curious about how Hugging Face can power your business applications? Get in touch with Yuvabe Studios to explore how we can design AI solutions that work for you.

Frequently Asked Questions (FAQ)

Here are a few things you need to know about LLMs and Hugging Face:

What does running an LLM locally mean?

Running an LLM locally means executing a large language model directly on your own device instead of sending data to cloud servers. This can reduce latency, improves privacy, and gives you greater control over how the model is used.

Are local LLMs better than cloud-based AI?

Local LLMs are better when privacy, offline access, and cost control are important. Cloud-based AI is still useful for very large models or when teams need instant scalability without managing hardware.

What hardware do you need to run an LLM locally?

Most small to mid-sized models can run on modern laptops or desktops. Devices with dedicated GPUs or Apple Silicon chips perform better, but optimized lightweight models can also run on CPUs.

What is Hugging Face used for?

Hugging Face is an open-source AI platform used to access pre-trained models, datasets, and tools for tasks such as text generation, image processing, speech recognition, and AI model deployment.

Is Hugging Face suitable for beginners?

Yes. Hugging Face offers simple APIs, pipelines, and hosted demos that allow beginners to experiment with AI models without deep machine learning expertise.
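On the hardware question above, a quick way to judge whether a model might fit on a given device is to estimate the memory its weights need: parameter count times bytes per parameter. The sketch below does that arithmetic for a hypothetical 7-billion-parameter model at common precisions. It covers the weights only; activations and generation caches need additional memory, so treat the numbers as a lower bound.

```python
# Approximate storage cost per parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Estimate the memory needed for model weights, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"7B model at {precision}: ~{weight_memory_gb(7e9, precision):.1f} GB")
```

This is why quantized (8-bit or 4-bit) versions of a model are often the ones that run comfortably on laptops.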